Want to get more out of your website? Out of your landing pages? Out of your pay-per-click ads? Do you want to know what’s working and what’s wrong with your digital marketing campaigns?

Well, this article explains exactly how to find the answers to all of those questions. Stick with me and you will become the guru of online optimization. Your name will be whispered in hushed and reverent tones around the office.

This article discusses in plain terms one of the least understood and most important aspects of digital marketing: statistical interpretation.

Now wait, give me a minute before you hit the back button. I’m not going to drone on about equations or theories. This is nuts and bolts information—statistics for the real world.

Believe it or not, your math teachers were right when they told you math would be important after graduation. In today’s data-driven world, math is central to business success and online profitability. However, while computers now do most of the calculations, most people still don’t know how to make the most of their data.

Why statistical significance matters

To optimize your digital marketing, you have to conduct tests. More importantly, you have to know how to evaluate the results of your test.

I can’t tell you how many times I’ve seen marketers (including conversion rate optimization experts!) point to a positive result and say, “Look at what I figured out!”

Unfortunately, in the long run, the promised results often don’t pan out.

Optimizing your marketing is about more than just getting a little green arrow on your screen. It’s a balance between human intuition and statistical data that drives the kind of results your digital marketing needs to succeed.

At this point you’re probably thinking, Okay, I get it. Testing is important. But a test is only as valuable as its interpretation—how do I know if I’m getting meaningful results?

That’s a good question. Actually, it’s an incredibly important question. To answer it, though, we need to ask the question a bit differently.

The real question is, “How do you know if you have statistically meaningful results?”

To get actionable information out of your tests, you need to understand 3 key aspects of your data: variance, confidence and sample size.

1. Variance

The first thing you need to understand about your data is its inherent variability. In a nutshell, variance is how much your numbers will change without any intervention from you.

That’s nice, why should I care?

Let’s look at a practical example. Since conversion rate is one of the most important measures of digital marketing success, we’ll use conversion rate optimization as our model scenario.

Say your average quarterly conversion rates for a year are 3%, 2%, 2.4% and 2.6%. That means your conversion rates vary between 2% and 3%.

This variance could be due to changes in competition, economic factors, seasonal effects or any other market influences, but your average conversion rate always hovers between 2% and 3%.

Now, imagine you eliminate the sidebar from your website.

For 3 months, you send half of your traffic to the original page and half to the sidebar-less page. 2% of the traffic sent to the original page converts. 2.8% of the traffic sent to the variant page converts.

Wahoo! Getting rid of the sidebar increased your conversion rate by 40%! That’s great, right?

Well, maybe (we’re talking statistics here, so get used to that answer).

Unfortunately, both numbers fall within the normal variance of your data. Yes, a 2.8% conversion rate is 40% higher than a 2% conversion rate, but maybe the people you sent to the sidebar-less page just happened to convert 40% better due to external factors.

After all, up to 3% of your traffic normally converts anyway.

Let’s try that again…

Now, say you try adding a lightbox to your page and run the test again. Same parameters: send half of your traffic to the original and half to the variant for 3 months.

This time, the conversion rate for the original is 2.5% and the conversion rate for the variant is 3.5%.

It’s a 40% improvement again. This time, though, the conversion rate is 17% higher than the highest 3-month conversion rate you’ve ever seen with your site.

Is the difference potentially meaningful? Yes!
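The percentages in these two tests are simple relative changes, plus a check against the historical quarterly range. Here is a minimal Python sketch of that arithmetic, assuming the quarterly history from the example; `relative_lift` and `within_history` are hypothetical helper names, not part of any testing tool.

```python
def relative_lift(baseline: float, variant: float) -> float:
    """Relative change of the variant over the baseline (0.4 means +40%)."""
    return (variant - baseline) / baseline


def within_history(rate: float, history: list) -> bool:
    """True if a rate falls inside the lowest-to-highest range seen historically."""
    return min(history) <= rate <= max(history)


# Quarterly conversion rates from the example, in percent.
history = [3.0, 2.0, 2.4, 2.6]

# Sidebar test: original 2%, variant 2.8%.
print(relative_lift(2.0, 2.8))          # about 0.4
print(within_history(2.8, history))     # True: inside normal variation

# Lightbox test: original 2.5%, variant 3.5%.
print(relative_lift(2.5, 3.5))          # about 0.4
print(within_history(3.5, history))     # False: above the best quarter
print(relative_lift(max(history), 3.5)) # about 0.167, i.e. ~17% above the best quarter
```

Both tests show the same 40% lift; only the second one lands outside the range your site already produces on its own.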

We usually describe variance in terms of wobble around the middle. In this example, our average conversion rate varies from 2% to 3%, so our variance would be 2.5% ± 0.5%.

Add 0.5% to 2.5% and you get 3%. Subtract 0.5% from 2.5% and you get 2%.
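The “±” description above is the midpoint plus or minus half the range. Statisticians usually mean something narrower by variance (the average squared deviation from the mean), so this sketch computes both for the example’s quarterly rates, using only Python’s standard `statistics` module. The helper name `midpoint_and_half_range` is just for illustration.

```python
import statistics

quarterly = [3.0, 2.0, 2.4, 2.6]  # quarterly conversion rates, in percent


def midpoint_and_half_range(values):
    """Return (middle, wobble) so that the observed range is middle ± wobble."""
    low, high = min(values), max(values)
    return (low + high) / 2, (high - low) / 2


middle, wobble = midpoint_and_half_range(quarterly)
print(f"{middle:.1f}% ± {wobble:.1f}%")  # 2.5% ± 0.5%

# The textbook measures of spread, for comparison.
print(statistics.mean(quarterly))      # average of the four quarters
print(statistics.variance(quarterly))  # sample variance, in squared percentage points
print(statistics.stdev(quarterly))     # sample standard deviation, in percentage points
```

The midpoint-and-wobble version is easier to reason about at a glance; the standard deviation is what most statistical tools report.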

Make sense?

A word to the wise

There are a lot of ways to determine variance.

One easy way is to run an A/A test. You just send half your traffic to a page and the other half of the traffic to an identical page and measure the difference.

Unfortunately, while you’d think that a lot of software packages would have a built-in way to track variance, they usually leave such things up to you.

Running an A/A test is an important way to both determine your site variance and check to see if your software is working properly. For example, since both arms show the same page, a conversion rate that is significantly higher for one arm probably means you have a problem.

Overall, you need to know your variance so that you can decide the value of your results. No matter how much money you might make off of a 40% increase in conversion rate, if it falls within normal variance, you don’t have any evidence that your change actually made a difference.
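To see why an A/A test rarely produces two identical numbers, here is a small simulation sketch. It assumes a true conversion rate of 2.5% on both identical pages and 1,000 visitors per arm (illustrative values, not measurements), with each visitor converting independently at random. The function `simulate_aa_test` is hypothetical.

```python
import random


def simulate_aa_test(true_rate, visitors_per_arm, seed=None):
    """Simulate one A/A test: both arms show the same page with the same true rate.

    Returns the observed conversion rate of each arm.
    """
    rng = random.Random(seed)
    conversions_a = sum(rng.random() < true_rate for _ in range(visitors_per_arm))
    conversions_b = sum(rng.random() < true_rate for _ in range(visitors_per_arm))
    return conversions_a / visitors_per_arm, conversions_b / visitors_per_arm


# Run a handful of A/A tests and look at how far apart the "identical" arms land.
for run in range(5):
    rate_a, rate_b = simulate_aa_test(0.025, 1_000, seed=run)
    print(f"run {run}: A = {rate_a:.1%}, B = {rate_b:.1%}, gap = {abs(rate_a - rate_b):.1%}")
```

Every gap in runs like these is pure chance. In a real A/A test, a gap far outside this kind of spread is a hint to check your tracking or traffic split.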

2. Confidence

Now that I’ve made you nervous about the validity of your results, let’s talk about confidence.

In statistics, confidence is an estimate of how unlikely it is that a result was due to chance. It’s possible that you just happened to send a bunch of more-likely-to-convert-than-normal visitors to your lightbox page in the previous example, right?

So, the question is, how confident are you in your results?

I have a feeling we’re going to talk about standard deviation…

In statistics, we look at populations (like the visitors to your site) in terms of standard deviation.

Standard deviations cut populations into segments based on their likelihood to convert. Remember the Bell curve from school? Well, that’s basically what’s happening on your site.

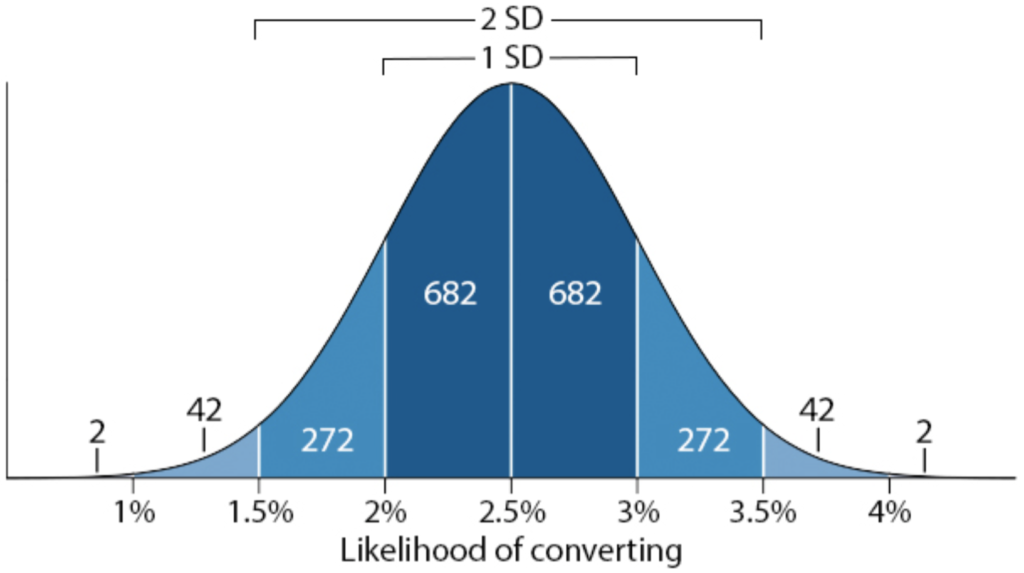

If your site from the previous example gets 2,000 visitors in 3 months (hopefully not actually the case, but it works for the example), here is how their likelihood of converting breaks down:

As you can see from the graph, the majority of your visitors (1,364 or ~68%) convert 2-3% of the time. That’s your first standard deviation (SD).

There are also 2 other main groups of visitors to your site. Some (272 or ~13.6%) convert 3-3.5% of the time. Another 272 convert 1.5-2% of the time.

That’s the second standard deviation, which represents about 27% of your traffic (544 people).

All told, these two standard deviations represent about 95% of your site traffic. In other words, out of the 2,000 visitors to your site, approximately 1,900 of them will convert between 1.5% and 3.5% of the time.
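These head counts come from the normal (bell-curve) distribution: about 68% of a population falls within one standard deviation of the mean, and about 95% within two. A sketch that reproduces them for 2,000 visitors using `math.erf` from Python’s standard library; the exact percentages give 1,365 in the middle band rather than the 1,364 you get from the rounded 68.2%.

```python
import math


def normal_cdf(z):
    """Probability that a standard normal value falls below z."""
    return 0.5 * (1 + math.erf(z / math.sqrt(2)))


visitors = 2_000

within_1_sd = normal_cdf(1) - normal_cdf(-1)   # middle band (first SD)
one_to_two_sd = normal_cdf(2) - normal_cdf(1)  # one side of the second SD band
beyond_2_sd = 1 - normal_cdf(2)                # one tail beyond two SDs

print(round(visitors * within_1_sd))    # 1365
print(round(visitors * one_to_two_sd))  # 272 on each side
print(round(visitors * beyond_2_sd))    # 46 in each tail
```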

Okay, but what’s my confidence?

Since only about 46 of your 2,000 visitors convert more than 3.5% of the time (and on the other end of the spectrum, about 46 convert less than 1.5% of the time), it seems unlikely that the lightbox page would average a 3.5% conversion rate simply by chance.

As a result, you can be about 70% confident that the lightbox page did not convert better by mere luck.

Where did you get that number?

To understand how confidence is calculated, we need to look at the situation we created with our test.

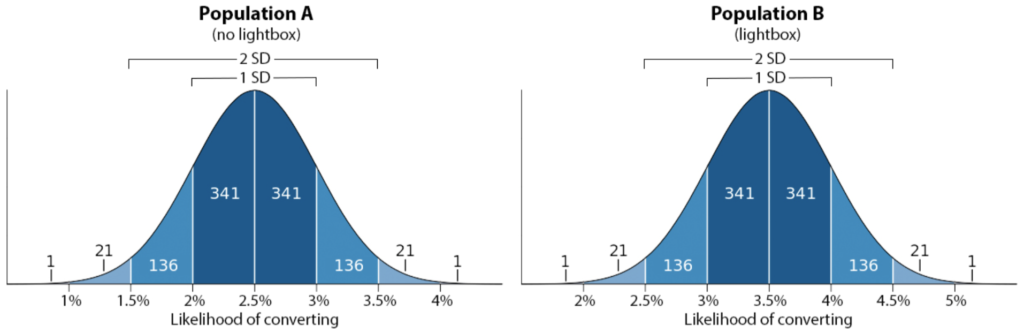

By sending our traffic to two different versions of our page, we created 2 traffic populations. Population A (1,000 visitors) went to the page without the lightbox. Population B (the other 1,000 visitors) went to the page with the lightbox.

Each population has its own Bell curve and is normally distributed around its average conversion rate—2.5% for Population A and 3.5% for Population B.

Now, if you notice, both populations actually cover some of the same conversion rate range. Some of the traffic in Population A and some of the traffic in Population B converts between 2% and 4% of the time.

That means there is overlap between the 2 populations.

If there’s overlap, there’s a chance that the difference between the populations is due to luck.

Think about it. If the two populations overlap at all, there is a possibility that Population B randomly has low converting people in it and/or Population A is randomly made up of conversion-prone visitors.

Remember, Population A and Population B are just samples of the total population. It’s completely possible that they are skewed samples.

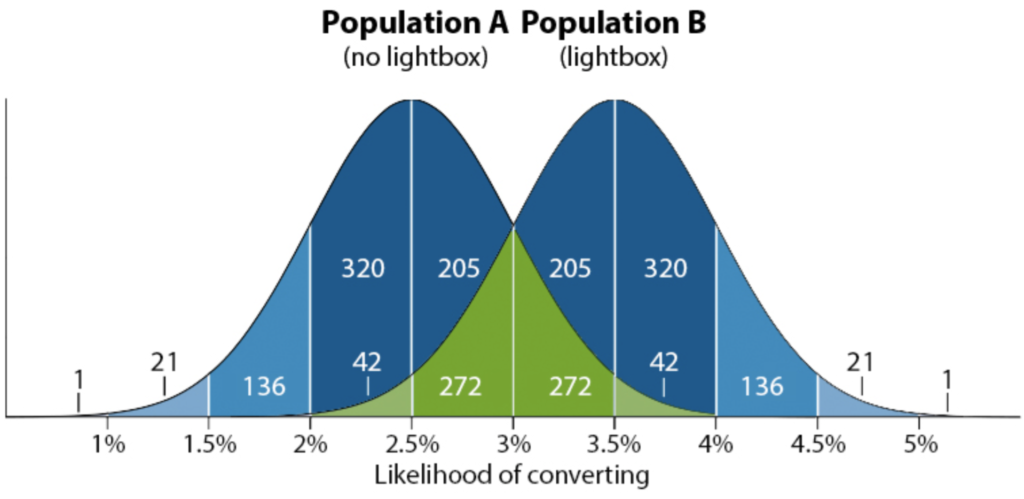

But what are the odds of that? Let’s look at the overlap of the 2 populations and add up how many visitors fall into the overlap.

In this case, about 632 of your 2,000 visitors convert between 2% and 4% of the time—no matter which page you send them to. As a percentage, this means that 31.6% of your traffic could have belonged in either group without changing your results.

Therefore, you can only be about 70% confident that your results indicate a true difference in conversion rate between the lightbox page and the lightbox-less page.

In practical terms, 70% confidence means our 40% improvement in conversion rate could occur randomly 1 out of every 3 times we run the test!

Say what?

Despite the fact that we’ve never converted this well before, we still have a 31.6% chance that our results were produced by nothing more relevant than a cosmic hiccup. How could that be?

This is a perfect example of the importance of confidence and variance.

Your results might look impressive, but if you have a lot of variability in your baseline results, it’s hard to have much faith in the outcome of your tests.

So, how do I get results I can use?

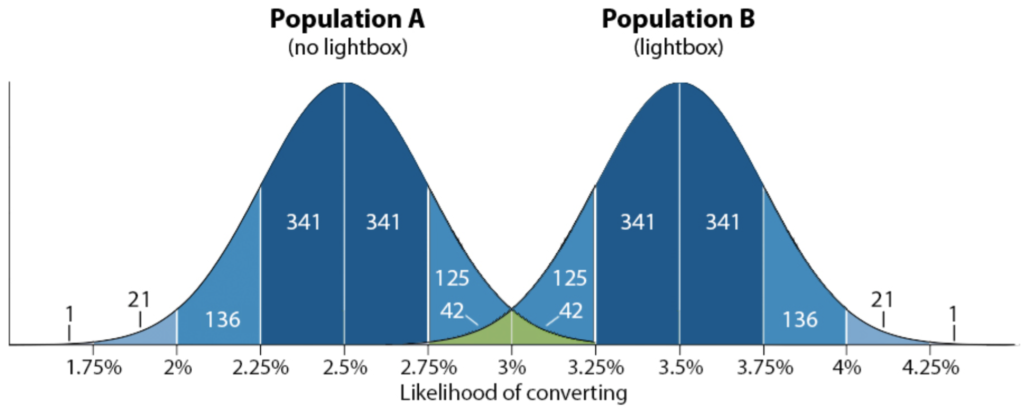

To demonstrate the effects of variability, let’s change your average quarterly conversion rates from 3%, 2%, 2.4% and 2.6% to 2.75%, 2.6%, 2.4% and 2.25%. This narrows the range from 1 percentage point (2.5% ± 0.5%) to 0.5 percentage points (2.5% ± 0.25%).

What effect does that have on our results?

We still have a 40% improvement in conversion rate, but now our 2 populations have a lot less overlap.

Now, only about 84 of our 2,000 visitors fall into the 2.75-3.25% conversion rate category, leaving us with a 4.2% chance our results were a random twist of fate.

In practical terms, that means we can be 95.8% confident that adding a lightbox improved our conversion rate.
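The confidence figures in this example follow a simplified rule: treat the share of visitors in the overlap as the chance of a fluke, and confidence as whatever is left. This sketch covers just that arithmetic for both scenarios. It is this illustration’s shortcut, not a standard significance test, and `overlap_confidence` is a hypothetical name.

```python
def overlap_confidence(overlap_visitors, total_visitors):
    """Simplified rule from this example: chance = overlap share, confidence = 1 - chance."""
    chance = overlap_visitors / total_visitors
    return chance, 1 - chance


for label, overlap in [("wide spread", 632), ("narrow spread", 84)]:
    chance, confidence = overlap_confidence(overlap, 2_000)
    print(f"{label}: {chance:.1%} chance, {confidence:.1%} confidence")
# wide spread: 31.6% chance, 68.4% confidence
# narrow spread: 4.2% chance, 95.8% confidence
```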

95.8%? It doesn’t seem very likely that our lightbox did better out of luck. Now we can “Wahoo!” with confidence!

Is 95% confidence good enough?

After knocking their heads together for a while, statisticians settled on 95% as the conventional bar for confidence. You may also see this reported as a p-value (a p-value < 0.1 is the same thing as > 90% confidence, a p-value < 0.05 is the same as > 95% confidence, and so on).

As we’ve just discussed, there’s always some chance that your results are due to luck. In general, though, if you’re confident that at least 19 out of 20 tests will yield the same result, the statistical deities will accept the validity of your test.

That being said, confidence is a very subjective thing.

You might want to be 99.999% confident that your bungee rope isn’t going to break, but you might try a restaurant that only 30% of reviewers like.

The same idea is true for business decisions. Risking money on a marketing strategy you are only 70% confident in might be acceptable, whereas going to market with a drug you’re only 95% confident won’t turn people into mass murderers might not be such a great idea.

Now that you understand what confidence is and where it comes from, it’s up to you to decide what level of confidence makes sense for your tests.

While calculating confidence is usually a lot more complicated than what we showed here, the principles behind confidence are fairly universal. That’s good, because you can leave the calculations to the computer and focus your time on making the right decisions with your data.
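For a sense of what the computer typically does instead, here is a sketch of one standard calculation, the two-proportion z-test, applied to the lightbox example. It assumes conversion counts of 25 and 35 out of 1,000 visitors per arm (what 2.5% and 3.5% imply). This is a different method from the overlap illustration, so its number will not match the 70% figure. It reports a p-value, and confidence is taken as 1 minus that p-value.

```python
import math


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided two-proportion z-test using the pooled conversion rate.

    Returns (z, p_value).
    """
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return z, p_value


# Lightbox example: 25 of 1,000 vs 35 of 1,000 visitors converted.
z, p = two_proportion_z_test(25, 1_000, 35, 1_000)
print(f"z = {z:.2f}, p-value = {p:.3f}, confidence = {1 - p:.1%}")
```

With these inputs the sketch gives a z of about 1.31 and a p-value of about 0.19 (roughly 81% confidence), which is still short of the conventional 95% bar.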

3. Sample size

Still with me? Awesome! We’re through the worst of it.

Now that you’ve seen the interplay between variance and confidence, you’re probably wondering, How do I get low variance and high confidence?

The answer is traffic. Lots and lots of traffic.

Traffic increases the amount of data you have, which makes your tests more reliable and insightful.

In fact, the relationship between the quantity and reliability of your data is so consistent that it’s a bit of a statistical dogma: With enough data, you can find a connection between anything.

Here we go again…

Looking back at our original example, if you have 2,000 visitors per quarter and quarterly conversion rates of 3%, 2%, 2.4% and 2.6%, your average conversion rate for 8,000 visitors is 2.5%.

There’s some variability there, but your average for the year is still 2.5%.

But what would happen if you looked at your yearly conversion rate for four years instead of your quarterly conversion rate for four quarters?

Well, assuming that everything in your industry remained fairly stable, every year, your conversion rate would be fairly close to 2.5%.

You might see 2.55% one year and 2.45% another year, but overall, the variation in your yearly average would be much smaller than the variation in your quarterly average.

Why? Bigger samples are less affected by unusual data.
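One way to put a number on this is the standard error of a conversion rate, sqrt(p(1 - p) / n), which shrinks with the square root of the sample size. A sketch comparing one quarter (2,000 visitors) with a full year (8,000 visitors), assuming a steady 2.5% true conversion rate; the function name is illustrative.

```python
import math


def conversion_rate_standard_error(rate, visitors):
    """Typical random wobble of an observed conversion rate around the true rate."""
    return math.sqrt(rate * (1 - rate) / visitors)


for label, visitors in [("quarter", 2_000), ("year", 8_000)]:
    se = conversion_rate_standard_error(0.025, visitors)
    print(f"{label}: 2.5% ± {se:.2%} (one standard error)")
```

Four times the visitors cuts the expected wobble in half (about 0.35 percentage points per quarter versus about 0.17 for the year).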

Consider the following:

The average net worth in Medina, WA was $44 million in 2007. There are only 1,206 households in Medina, but 3 of the residents are Bill Gates, Jeff Bezos and Craig McCaw.

If Bill moved, the average net worth would drop to $6 million. If all 3 moved, average net worth would only be $224,000.

Source: Hair JF, Wolfinbarger M, Money AH, Samouel P, Page MJ. Essentials of business research methods. Routledge. 2015. p. 316. [print].

When you only look at the small population of Medina, WA, these 3 men have a huge effect on the average net worth.

However, the average net worth in Washington is only $123,000.

In other words, even though these 3 men are worth $53.1 billion, in a state of 7 million people, their wealth barely affects the average net worth.

So, the bigger your sample size, the less your averages will be affected by extreme data.

What does that mean for my marketing?

If the populations you test are small, unusual groups like Bill and company will have a big effect on your variance.

For example, if 50 of your visitors are lightbox addicts who troll for lightboxes and compulsively convert whenever they find one, that’s going to have a dramatic effect on your conversion rate if you are only sampling from 2,000 visits.

Since you normally only get 50 conversions from 2,000 visitors (2.5% conversion rate, remember?), adding another 50 will have a huge effect on your variance.

Now, all of a sudden, your quarterly conversion rate is 5% (100 conversions from 2,000 visitors) instead of 2-3%.

On the other hand, if you have 200,000 visitors per quarter and the League of Lightbox Lovers shows up, the difference will be much more subtle.

Normally, you would get 5,000 conversions from 200,000 visitors, but this time you get 5,050.

Big whoop. Your conversion rate for this quarter is 2.525% instead of 2.5%. That falls well within your expected variance.
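Here is the arithmetic behind this comparison as a short sketch: the same 50 extra conversions added to a small quarter and a large one, using the example’s own visitor and conversion counts.

```python
def conversion_rate(conversions, visitors):
    """Share of visitors who converted."""
    return conversions / visitors


extra = 50  # the League of Lightbox Lovers

small = conversion_rate(50 + extra, 2_000)       # normal 50 conversions + 50 extras
large = conversion_rate(5_000 + extra, 200_000)  # normal 5,000 conversions + 50 extras

print(f"{small:.1%}")  # 5.0%
print(f"{large:.3%}")  # 2.525%
```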

Do you see how sample size affects the reliability of your results?

The more data you have, the easier it is to tell if your change made a difference. Most people tend to orbit around average (back to the Bell curve, right?), so large traffic samples balance out the outliers and reduce the variance in your data.

Less variance means more confidence, so it’s an all around win!

How much traffic do I need?

It’s usually best to set up your tests based on conversions rather than overall site visits (unless that’s one of the things you’re testing, of course). That way, you know you’re getting enough conversions to make your data meaningful.

Just to give you a ballpark figure, it typically works well to shoot for at least 350-400 conversions per testing arm.

However, this range will change dramatically depending on your overall traffic volume.

I’ve seen companies that blow past this number in 20 minutes. Other sites take months or years to get that many conversions.
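Because the target is a conversion count, how long a test takes depends on your traffic and conversion rate. Here is a rough sketch, assuming an even 50/50 split and a steady conversion rate; `quarters_to_target` is a hypothetical helper.

```python
def quarters_to_target(target_per_arm, visitors_per_quarter, conversion_rate, arms=2):
    """Estimate how many quarters it takes each arm to collect target_per_arm conversions."""
    conversions_per_arm_per_quarter = visitors_per_quarter / arms * conversion_rate
    return target_per_arm / conversions_per_arm_per_quarter


# 400 conversions per arm at a 2.5% conversion rate:
print(quarters_to_target(400, 2_000, 0.025))    # about 16 quarters (4 years)
print(quarters_to_target(400, 200_000, 0.025))  # about 0.16 quarters (roughly two weeks)
```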

Scale your tests to the traffic volume that works for your site. If you’ve been testing for 3 months and have a consistent 40% improvement in conversion rate but only 60 conversions between the arms and 70% confidence, that might be enough to convince you to declare a winner.

After all, your data might look better after a year, but is that worth missing out on an extra 20 conversions per quarter while you wait?

Unfortunately, sample size can be one of the trickiest aspects of your tests to optimize. Depending on your goals, you can often make an educated guess or just run your tests until you get what you need.

However, if you find yourself in a pinch, there are online calculators for determining what sample size you will need.
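Many of those calculators are built on some version of the standard sample-size formula for comparing two proportions. Here is a sketch of that normal-approximation formula using Python’s built-in `statistics.NormalDist` (Python 3.8+). The 95% confidence level and 80% power are common defaults, not requirements. The 2.5% and 3.5% rates are borrowed from the lightbox example, and `visitors_per_arm` is a hypothetical name.

```python
import math
from statistics import NormalDist


def visitors_per_arm(base_rate, expected_rate, confidence=0.95, power=0.80):
    """Approximate visitors needed in each arm to detect base_rate -> expected_rate.

    Two-sided test, normal approximation, equal traffic split.
    """
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%
    z_beta = NormalDist().inv_cdf(power)                      # about 0.84 for 80%
    pooled = (base_rate + expected_rate) / 2
    numerator = (
        z_alpha * math.sqrt(2 * pooled * (1 - pooled))
        + z_beta * math.sqrt(base_rate * (1 - base_rate) + expected_rate * (1 - expected_rate))
    ) ** 2
    return math.ceil(numerator / (expected_rate - base_rate) ** 2)


n = visitors_per_arm(0.025, 0.035)
print(n, "visitors per arm")
print(round(n * 0.025), "expected conversions in the original arm")
```

For this example the sketch lands in the mid-4,000s of visitors per arm. Smaller expected lifts push the number up quickly, because it scales with one over the squared difference between the rates.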

The most important takeaway from this section? The more data you have, the more reliable your results will be.

Conclusion

Variance. Confidence. Sample size. To truly understand your data and its implications, you need to understand each of these aspects and how they interact.

Variance acts as the gatekeeper for your data. To even be worth considering, your results need to exceed the normal variance of your website.

Once you’ve hit that benchmark, you need to determine how confident you are that your results aren’t simply due to chance.

Finally, if you want to minimize variance and maximize confidence, choose a sample size that’s big enough to be truly representative of your traffic and small enough to be achievable.

Okay, you made it! Let’s wrap up this post.

Now that you’ve got this knowledge under your belt, you’ll be surprised how often it comes in handy.

Just a word of warning, though: once you start saying things like, “Well, that data looks good, but what’s our normal variance?” you are likely to evoke awe in those around you. You might even land a promotion.

Like I said, math really is valuable…